CISOs who want a better understanding of security analytics, such as Machine Learning (ML) or User and Entity Behavior Analytics (UEBA), have limited, or shall we say poor, sources of information. They can find either 1) technical and academic research papers that are a bit… dry, or 2) vendor marketing literature that usually equates advanced analytics with some type of magic wand that can “automagically” solve every security problem in the world.

This article aims to equip CISOs and cybersecurity directors with a base level of information to:

- Understand how security analytics can help them in a key pillar of their mission: to make sure that the organization can efficiently detect, investigate, and respond to threats and incidents.

- Spot fake UEBA from a mile away.

Classifying analytics based on sophistication

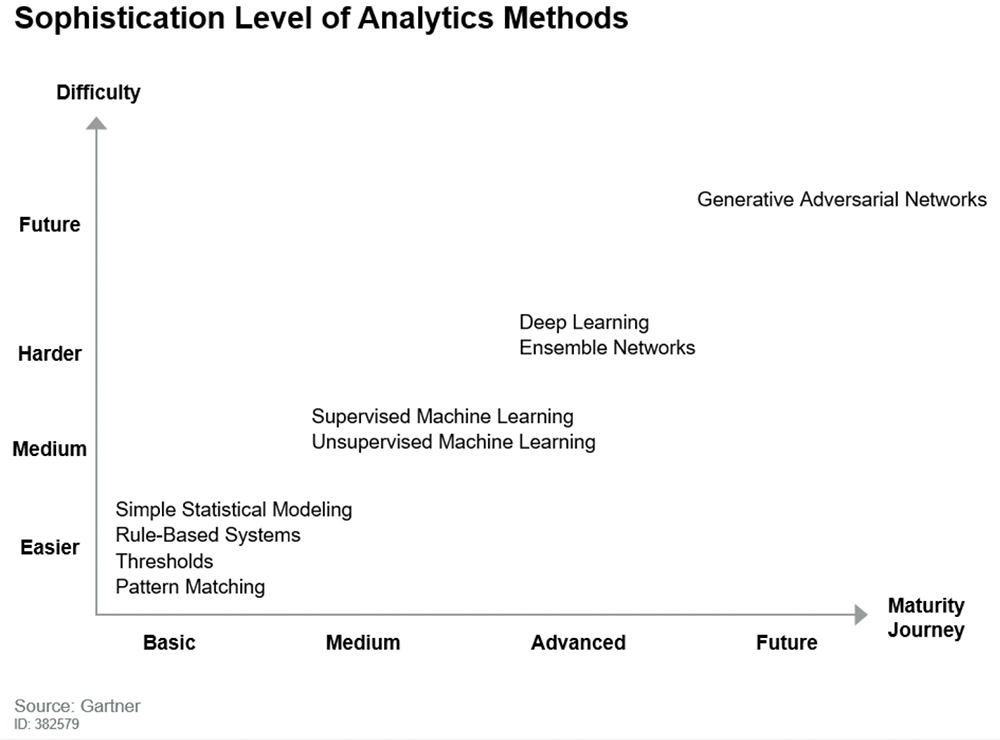

There are several types of analytics. Some are more powerful than others, and each has its own pros and cons. These analytics methods can be classified and ranked by their level of sophistication, which usually translates to their effectiveness.

When I was at Gartner, I authored a research note titled “How to Build Security Use Cases for Your SIEM”. In that research note, I defined the following table to capture the main analytics methods, ranked by how difficult they are to comprehend and by where they fit in the security organization’s maturity journey.

Some of the basic methods were invented a long time ago. For example, correlation rule-based systems have been in production since at least 2000, while thresholds and pattern matching (aka “signature-based detections”) are even older. Modern attacks have evolved to evade these legacy detections; even correlation rules are easily bypassed. Nevertheless, all these methods are still standard in today’s security tools.

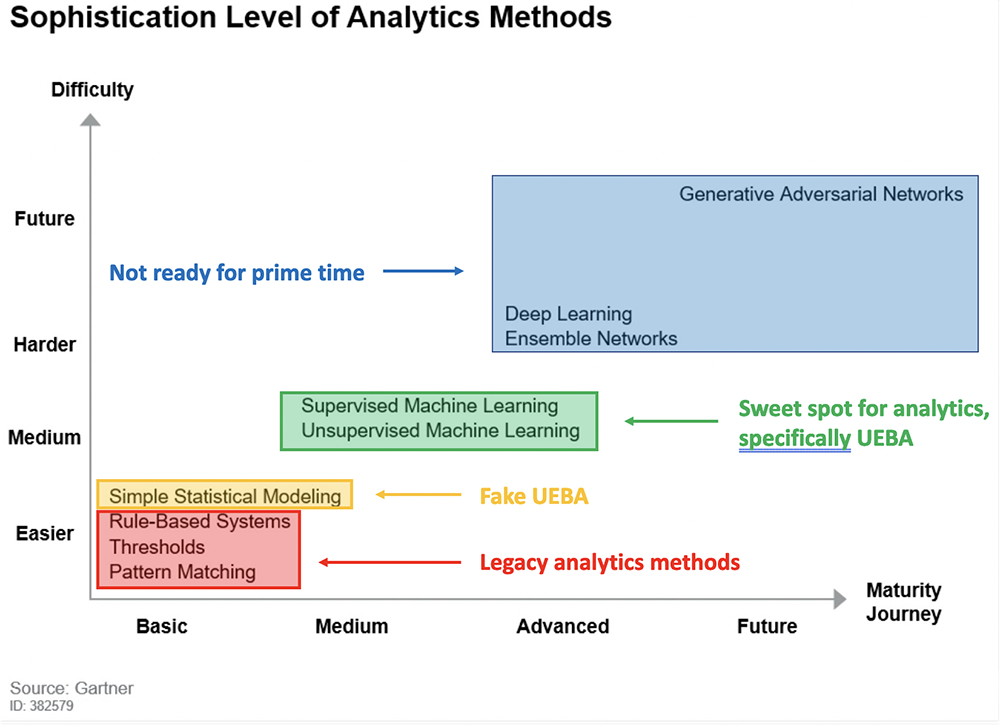

On the other hand, some of the more sophisticated methods, such as deep learning, ensemble networks, and generative adversarial networks (GANs), can be very effective. However, they are only used within a narrow, very specific scope and are often not ready to be deployed across the whole organization.

The sweet spot for security analytics today is machine learning, both supervised and unsupervised. The poster child for machine learning methods is UEBA, which uses machine learning to analyze the behavior of users and entities. The ML engine computes baselines of normal behavior across the organization and flags anomalous behaviors worth investigating. UEBA has been on the market long enough to prove very effective at detecting threats and incidents.

Analytics in depth

These analytics methods are not mutually exclusive; maximum protection is achieved by running several of them in conjunction. This can be referred to as “analytics-in-depth”, similar to the “defense-in-depth” approach that is prevalent in organizations.

A popular analytics-in-depth approach has emerged that balances complexity against effectiveness. It combines the main basic methods (pattern matching, thresholds, correlation rule-based systems) with statistical modeling and the supervised and unsupervised machine learning that real UEBA offers.

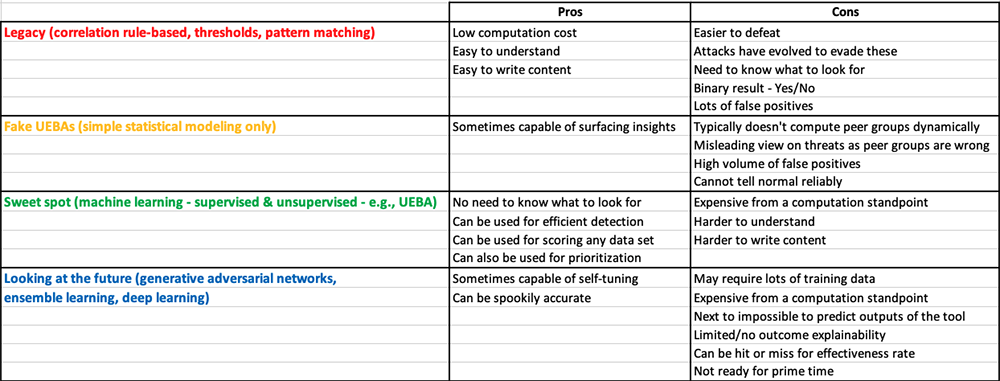

Pros and cons of different analytics methods

The output of the basic methods of pattern matching, thresholds and correlation rule-based systems is binary, and an alert is triggered as soon as a specific condition is met:

- Pattern matching: as soon as the data has matched a specific pattern, an alert is generated

- Thresholds: as soon as the data has reached or crossed a specific threshold, an alert is generated

- Correlation rule-based systems: as soon as the data has matched a specific “if – then – else” rule, an alert is generated
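To make the binary nature of these basic methods concrete, here is a minimal Python sketch of each one. The signature, the five-failure threshold, and the event format (dictionaries with a `type` field, all for a single user) are hypothetical illustrations, not rules taken from any particular SIEM.

```python
import re

# Hypothetical signature: an encoded PowerShell command line.
ENCODED_POWERSHELL = re.compile(r"powershell(\.exe)?\s+.*-enc", re.IGNORECASE)


def pattern_rule(command_line: str) -> bool:
    # Pattern matching: fire as soon as the data matches a known signature.
    return ENCODED_POWERSHELL.search(command_line) is not None


def threshold_rule(failed_logins: int, limit: int = 5) -> bool:
    # Threshold: fire as soon as the count reaches or crosses the limit.
    return failed_logins >= limit


def correlation_rule(events: list[dict]) -> bool:
    # Correlation: IF consecutive failed logins cross the threshold
    # THEN a successful login follows, alert; ELSE stay silent.
    failures = 0
    for event in events:
        if event["type"] == "login_failure":
            failures += 1
        elif event["type"] == "login_success":
            if threshold_rule(failures):
                return True
            failures = 0  # a success resets the run of failures
    return False


burst = [{"type": "login_failure"}] * 5 + [{"type": "login_success"}]
print(pattern_rule("powershell.exe -nop -enc SQBFAFgA"))  # True
print(correlation_rule(burst))       # True
print(correlation_rule(burst[2:]))   # False: only 3 failures before the success
```

Every function returns only `True` or `False`, and each one catches only what its author anticipated when writing it.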

With any of these methods, either the required condition is met and an alert is generated, or the condition is not (yet) met and the system stays silent. It’s pretty crude, really. Another drawback is that organizations need to know what to look for in advance: the specific condition that triggers the alert must be programmed beforehand. But how can organizations know how they will be attacked?

Simple statistical modeling can surface some insights on its own (i.e., without being told to look for something specific); however, it is not an effective analytics approach in isolation, as it is plagued with false positives (as well as false negatives). We often see it embedded as one layer of a defense-in-depth approach. Beware of vendors who call their simple statistical modeling “UEBA”; it is not real UEBA. More on this below.
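The false-positive and false-negative problem is easy to reproduce. The sketch below assumes a simple z-score model over a single organization-wide baseline of daily upload volume in MB; the function name, the cutoff of 3 standard deviations, and all numbers are hypothetical.

```python
from statistics import mean, stdev


def zscore_anomalies(history: list[float], observations: list[float],
                     cutoff: float = 3.0) -> list[float]:
    # Flag observations more than `cutoff` sample standard deviations
    # away from the mean of one global, context-free baseline.
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return [x for x in observations if x != mu]
    return [x for x in observations if abs(x - mu) / sigma > cutoff]


history = [10, 12, 9, 11, 10, 13, 8, 12, 11, 10]  # typical daily uploads (MB)
# 500 MB: a backup administrator's routine job.
# 15 MB: a user who normally uploads about 1 MB, quietly exfiltrating data.
print(zscore_anomalies(history, [500, 15, 11]))  # → [500]
```

The routine backup job is flagged (a false positive) while the exfiltration stays under the cutoff (a false negative), because the model has no notion of what is normal for each user or for that user’s peers.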

Machine learning methods are much more granular: they use powerful algorithms to learn what is “normal” and continuously score data, and flows of data, against ML models, without being told to look for specific conditions. This enables constant risk scoring and prioritization of risks across the whole organization.
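The difference between a binary alert and a continuous score can be sketched with a deliberately simple per-user probabilistic baseline. This is a stand-in for illustration, not the ML models a real UEBA uses: it assumes a single categorical feature (which host a user accesses), applies Laplace smoothing so never-seen values still get a small nonzero probability, and scores each event by its surprise in bits. `BehaviorBaseline` and `rank_users` are hypothetical names.

```python
import math
from collections import Counter, defaultdict


class BehaviorBaseline:
    """Per-user frequency model of one categorical feature (e.g., hosts accessed)."""

    def __init__(self, smoothing: float = 1.0) -> None:
        self.smoothing = smoothing
        self.counts: dict[str, Counter] = defaultdict(Counter)
        self.vocabulary: set[str] = set()

    def learn(self, user: str, value: str) -> None:
        self.counts[user][value] += 1
        self.vocabulary.add(value)

    def probability(self, user: str, value: str) -> float:
        seen = self.counts[user]
        total = sum(seen.values())
        # One extra slot beyond the known vocabulary reserves mass for unseen values.
        k = len(self.vocabulary) + 1
        return (seen[value] + self.smoothing) / (total + self.smoothing * k)

    def score(self, user: str, value: str) -> float:
        # Surprise in bits: values that are rare for this user score high.
        return -math.log2(self.probability(user, value))


def rank_users(baseline: BehaviorBaseline, events: list[tuple[str, str]]) -> list[tuple[str, float]]:
    # Accumulate a continuous risk score per user and rank users by it.
    risk: dict[str, float] = defaultdict(float)
    for user, value in events:
        risk[user] += baseline.score(user, value)
    return sorted(risk.items(), key=lambda kv: kv[1], reverse=True)


baseline = BehaviorBaseline()
for _ in range(50):
    baseline.learn("alice", "hr-app")
    baseline.learn("bob", "build-server")
print(rank_users(baseline, [("alice", "hr-app"), ("bob", "domain-controller")]))
# bob ranks first: a domain controller is highly unusual for him
```

Nothing here was told to look for domain-controller access; the ranking falls out of comparing each event with each user’s own history.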

Pros and cons can be described in the table below:

We can now revisit Figure 1 and identify the sweet spot for security analytics:

UEBA beyond detection

UEBA has been a real breakthrough for detecting threats and incidents. It alerts organizations when an anomaly is notable enough to deserve attention from the security team. The best UEBA can actually do much more.

At their core, the best UEBA solutions are machine learning engines that understand “normal” and score every single event, user, and entity against that baseline with high fidelity and high accuracy. This capability applies not only to detection but across the full threat detection, investigation, and response (TDIR) lifecycle that security operations teams run in their organizations.

Specifically:

- Detection: via understanding of normal behaviors, and identification of anomalous or risky behaviors

- Triage of incidents: via scoring and prioritization of alerts generated across the whole organization, including from third-party tools

- Investigation: via stitching of all the normal and abnormal events into the operational model of a particular incident

- Response: by complementing SOARs’ static playbooks with decision support on the actions most likely to be appropriate
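Triage across tools comes down to ranking alerts by more than their vendor-assigned severity. The sketch below uses one hypothetical weighting, assumed purely for illustration and not how any specific product computes it: severity multiplied by one plus the behavioral risk score of the user involved. The `Alert` fields and tool names are also hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    source: str    # third-party tool that raised the alert (hypothetical names)
    user: str
    severity: int  # vendor-assigned, 1 (low) to 5 (critical)


def prioritize(alerts: list[Alert], user_risk: dict[str, float]) -> list[Alert]:
    """Order alerts by vendor severity weighted by the involved user's behavioral risk."""

    def priority(alert: Alert) -> float:
        # Users without a risk score fall back to the raw vendor severity.
        return alert.severity * (1.0 + user_risk.get(alert.user, 0.0))

    return sorted(alerts, key=priority, reverse=True)


alerts = [Alert("EDR", "alice", 4), Alert("firewall", "bob", 2)]
risk = {"alice": 0.1, "bob": 5.0}
print([a.user for a in prioritize(alerts, risk)])  # → ['bob', 'alice']
```

Here the medium-severity firewall alert on a high-risk user outranks the higher-severity EDR alert on a user behaving normally; with an empty risk map, the order falls back to vendor severity.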

Two simple ways to uncover “fake” UEBA

Readers should beware of “fake” UEBA on the market, as many vendors seem to exaggerate what they really have. “Fake” UEBA is nothing more than standalone simple statistical modeling marketed as UEBA. There are two easy litmus tests organizations can apply to uncover it.

Gartner gives specific guidance in its “Market Guide for User and Entity Behavior Analytics (UEBA)” (Gartner subscription required). In this research paper, Gartner offers a simple litmus test to distinguish true from fake UEBA: in true UEBA, peer grouping is done dynamically instead of statically.

When a vendor approaches you selling their artificial intelligence (AI), their machine learning, or their UEBA, ask them how they build peer groups. If they build groups only by pulling information from Active Directory or an LDAP directory and assigning groups based on this context data, they are selling fake UEBA! If they build peer groups dynamically, defining profiles of similar users and similar assets by calculating nearest neighbors across hundreds of parameters, then it may very well be true UEBA. When the analytics compute behaviors against peers, this makes all the difference between a tool that generates interesting insights and a tool that generates a bunch of noise.
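The two approaches to peer grouping can be contrasted in a few lines. The static version reads groups straight from a directory; the dynamic version treats each user as a vector of observed behavior and picks the k most similar users by cosine similarity (a simple k-nearest-neighbors approach). The four features, the user names, and k=2 are hypothetical; a real product would use far more parameters and its own algorithms.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity between two behavior vectors (0.0 if either is all zeros).
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def static_peers(directory: dict[str, str], user: str) -> list[str]:
    # "Fake" approach: peers are whoever shares the user's directory group.
    group = directory[user]
    return [name for name, g in directory.items() if g == group and name != user]


def dynamic_peers(profiles: dict[str, list[float]], user: str, k: int = 2) -> list[str]:
    # Dynamic approach: peers are the k users whose observed behavior is most similar.
    target = profiles[user]
    scored = [(cosine(target, vec), name) for name, vec in profiles.items() if name != user]
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]


# Features: weekly accesses to [source repo, CI server, payroll app, CRM].
profiles = {
    "dev_alice": [30, 20, 0, 1],
    "dev_bob": [25, 18, 0, 0],
    "hr_carol": [0, 0, 40, 2],
    "sales_dan": [0, 0, 1, 35],
    "erin": [28, 15, 0, 3],  # listed under Sales, but works like a developer
}
directory = {"dev_alice": "Engineering", "dev_bob": "Engineering",
             "hr_carol": "HR", "sales_dan": "Sales", "erin": "Sales"}

print(static_peers(directory, "erin"))   # → ['sales_dan']
print(dynamic_peers(profiles, "erin"))   # → ['dev_alice', 'dev_bob']
```

Measured against her static peer, Erin’s heavy repository access looks wildly anomalous; measured against her dynamic peers, it is ordinary.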

Here is another simple litmus test: ask your UEBA to tell you what “normal” is. If it cannot tell you the normal behavior of any user, identity, or asset at any moment, it is likely a “fake” UEBA. True UEBA detects anomalies and abnormal behavior, but it can also surface what is normal.
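Being able to surface “normal” implies that the model keeps an inspectable baseline. A minimal sketch, assuming normal is summarized by the hours of day when a user is active and the hosts they use most; the `NormalProfile` class and its 90% coverage cutoff are hypothetical choices for illustration.

```python
from collections import Counter, defaultdict


class NormalProfile:
    """Per-user baseline that can be queried for what is normal, not just what is anomalous."""

    def __init__(self) -> None:
        self.hours: dict[str, Counter] = defaultdict(Counter)
        self.hosts: dict[str, Counter] = defaultdict(Counter)

    def observe(self, user: str, hour: int, host: str) -> None:
        self.hours[user][hour] += 1
        self.hosts[user][host] += 1

    def describe(self, user: str, coverage: float = 0.9, top_hosts: int = 3) -> dict:
        # Greedily take the busiest hours until they cover `coverage` of activity;
        # this yields the smallest set of hours that reaches the cutoff.
        hours = self.hours[user]
        total = sum(hours.values())
        typical, covered = [], 0
        for hour, count in hours.most_common():
            if covered >= coverage * total:
                break
            typical.append(hour)
            covered += count
        return {
            "typical_hours": sorted(typical),
            "usual_hosts": [h for h, _ in self.hosts[user].most_common(top_hosts)],
        }


profile = NormalProfile()
hours = [9] * 10 + [10] * 8 + [14] * 6 + [23]
hosts = ["laptop-a"] * 20 + ["vpn-gw"] * 5
for hour, host in zip(hours, hosts):
    profile.observe("alice", hour, host)
print(profile.describe("alice"))
# → {'typical_hours': [9, 10, 14], 'usual_hosts': ['laptop-a', 'vpn-gw']}
```

The single 11 p.m. login falls outside the reported normal hours, so the same baseline that answers “what is normal?” also explains why that login would be worth a closer look.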

Exabeam is a pioneer in UEBA

Exabeam has a history of innovation and disruption in the security analytics space with the first UEBA that:

- Is part of an analytics-in-depth approach that collectively includes statistical modeling, correlation rule-based systems, thresholds, and pattern matching

- Understands “normal” for users and entities

- Alerts on notable anomalies that deserve the attention of the cybersecurity teams

- Prioritizes third-party alerts with “dynamic alert prioritization” to surface the most relevant alerts

- Enriches and contextualizes all logs and events via advanced analytics rather than via static playbooks

- Is bundled with valuable content for maximum efficiency of detection, investigation and response